Have you ever wondered why Kubernetes uses the Pod as its smallest deployable unit? Why not deploy containers directly? Why would we even need to run multiple containers together? Can't we put all our processes into a single container? In this article I will try to answer these questions.

Kubernetes uses Pods, rather than individual containers, as the smallest deployable units that can be created, scheduled, and managed. This design decision is motivated by several factors, including design principles, operational efficiency, and application architecture considerations.

Below are some of the reasons Kubernetes works with Pods instead of individual containers.

Grouping Related Containers

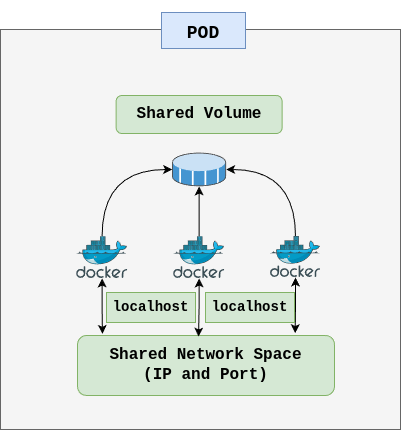

Shared Networking: Containers in the same pod share the same IP address and port space, which means they can communicate with each other using localhost. This is particularly useful for containers that need to communicate closely with each other, such as a main application and a sidecar that logs or processes the main application's data. Because the port space is shared, two containers in the same pod cannot listen on the same port. For more information, see Container Communication within Kubernetes Pods: Shared IP and Ports.

Shared Storage Volumes: Pods allow containers to share storage volumes, enabling data to be passed easily between containers. This is useful for containers that need to access the same set of files, such as an application container and a container that processes files generated by the application.

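Tightly Coupled Applications: Often, applications consist of multiple components that work closely together. A web server might run alongside a helper container that refreshes the content it serves, or a local proxy that handles its outbound traffic. Grouping these into a pod ensures they are always scheduled onto the same node and share the same network, simplifying communication and coordination. Components that need to scale or be updated independently, such as a database or a cache, usually belong in their own pods.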
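To make the shared network and shared volume more concrete, here is a minimal sketch that builds a two-container Pod manifest as a Python dictionary and prints it as JSON. The pod name, container names, images, and paths are all assumptions chosen for illustration; the small `shared_volume_names` helper is hypothetical and only inspects the dictionary.

```python
import json

# All names, images, and paths below are illustrative assumptions.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-content-writer"},
    "spec": {
        # An emptyDir volume exists for the lifetime of the Pod and can be
        # mounted by any container in it.
        "volumes": [{"name": "html", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.27",
                "ports": [{"containerPort": 80}],
                "volumeMounts": [
                    {"name": "html", "mountPath": "/usr/share/nginx/html"}
                ],
            },
            {
                "name": "content-writer",
                "image": "busybox:1.36",
                # Writes a page into the shared volume, then fetches it back
                # through the web container over localhost (shared network).
                "command": [
                    "sh",
                    "-c",
                    "while true; do date > /work/index.html; sleep 5; "
                    "wget -qO- http://localhost:80/; done",
                ],
                "volumeMounts": [{"name": "html", "mountPath": "/work"}],
            },
        ],
    },
}


def shared_volume_names(pod):
    """Return the names of volumes mounted by more than one container."""
    counts = {}
    for container in pod["spec"]["containers"]:
        for mount in container.get("volumeMounts", []):
            counts[mount["name"]] = counts.get(mount["name"], 0) + 1
    return sorted(name for name, n in counts.items() if n > 1)


print(json.dumps(pod, indent=2))
print("Shared volumes:", shared_volume_names(pod))
```

Since kubectl accepts JSON manifests as well as YAML, the printed manifest could be saved to a file and applied with `kubectl apply -f`.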

Resource Sharing and Management

Efficient Resource Use: By grouping closely related containers that together form a single unit of the application, Kubernetes can manage resources more efficiently. The containers in a pod share the node's kernel, so the overhead of running a separate guest operating system for each component (as would be the case with VMs) is avoided.

Simplified Resource Allocation: CPU and memory requests and limits are declared per container, and the scheduler adds up the requests of all containers in a pod to find a node that can host the pod as a whole. Because the pod is placed as one unit, related containers never end up split across nodes, which simplifies resource allocation and management.
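As a rough sketch of how per-container requests add up to the amount a node must have free for the whole pod, the snippet below sums CPU and memory requests across containers. It is a simplification: the parsers handle only a few quantity suffixes, init containers and pod overhead are ignored, and the container names and values are assumptions.

```python
# Simplified quantity parsing: only the suffixes used in this example.
MEMORY_UNITS = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '500m' or '1' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """Convert a memory quantity such as '256Mi' to bytes."""
    for suffix, factor in MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain number of bytes


def pod_requests(containers):
    """Sum the CPU and memory requests of all containers in a pod."""
    cpu = sum(cpu_millicores(c["resources"]["requests"]["cpu"]) for c in containers)
    mem = sum(memory_bytes(c["resources"]["requests"]["memory"]) for c in containers)
    return cpu, mem


# Hypothetical containers of one pod.
containers = [
    {"name": "app", "resources": {"requests": {"cpu": "500m", "memory": "256Mi"}}},
    {"name": "sidecar", "resources": {"requests": {"cpu": "100m", "memory": "64Mi"}}},
]

cpu, mem = pod_requests(containers)
print(f"{cpu}m CPU, {mem // 1024**2}Mi memory")  # prints: 600m CPU, 320Mi memory
```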

Simplifying Application Deployment and Management

Atomic Deployment and Scaling: A pod is scheduled, scaled, and deleted as a unit, so all of its containers always run together on the same node. If one container crashes, the kubelet restarts that container according to the pod's restartPolicy, while the other containers in the pod keep running. If the pod itself is lost, a controller such as a Deployment replaces it as a whole, so all components come back together.

Support for Microservices Architecture: Pods fit the microservices architecture well. Each microservice typically runs in its own pod, and any helper containers it needs to work closely with run in that same pod. This supports both separation of concerns between services and tight coupling (when necessary) between a service and its helpers.

Encapsulation and Security

Isolation: While containers in a pod share certain resources, each container can still have its own CPU and memory limits and its own filesystem. Under the hood, the container runtime uses Linux namespaces, control groups (cgroups), and other kernel mechanisms to keep containers isolated from each other and from the host.

Security Context: A security context defines privilege and access control settings for a Pod or Container. Kubernetes allows you to define these settings at the pod level, which simplifies the management of security policies for containers that need to operate under the same security context, and to override them for an individual container where needed.
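The following sketch illustrates how a pod-level security context and a container-level one can combine. The manifest is built as a Python dictionary, and the pod name, container names, image, and user IDs are assumptions. The `effective_run_as_user` helper is hypothetical: it only mimics the documented rule that a container-level setting overrides the pod-level one where the two overlap.

```python
# Illustrative manifest; names, image, and user IDs are assumptions.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "security-context-demo"},
    "spec": {
        # Pod-level settings apply to every container unless overridden.
        "securityContext": {"runAsUser": 1000, "runAsNonRoot": True},
        "containers": [
            {
                "name": "app",
                "image": "busybox:1.36",
                "command": ["sleep", "3600"],
            },
            {
                "name": "helper",
                "image": "busybox:1.36",
                "command": ["sleep", "3600"],
                # Container-level settings win where they overlap.
                "securityContext": {
                    "runAsUser": 2000,
                    "allowPrivilegeEscalation": False,
                },
            },
        ],
    },
}


def effective_run_as_user(pod, container_name):
    """Return the UID a container would run as, given both levels."""
    spec = pod["spec"]
    pod_level = spec.get("securityContext", {}).get("runAsUser")
    for container in spec["containers"]:
        if container["name"] == container_name:
            return container.get("securityContext", {}).get("runAsUser", pod_level)
    raise KeyError(container_name)


print(effective_run_as_user(pod, "app"))     # prints: 1000
print(effective_run_as_user(pod, "helper"))  # prints: 2000
```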

Why Not Use a Single Container for All Processes?

Running all processes in a single container goes against the common container design guideline of one main process, or one concern, per container. Following this guideline keeps each container lightweight, easy to manage, and scalable. It also simplifies updating, maintaining, and troubleshooting containers, as each container is limited to a single responsibility.

Other reasons to consider are:

Complexity: One large container becomes harder to manage, debug, and update.

Security: Mixing processes increases the attack surface, potentially compromising the entire application.

Resource Isolation: Different components might have varying resource needs. Packing everything together can lead to inefficient resource allocation.

In summary, Kubernetes pods offer a more flexible, efficient, and scalable way to deploy and manage applications than using individual containers directly. Pods facilitate the microservices architecture, improve resource utilization, and simplify operational tasks, making them a fundamental component of the Kubernetes ecosystem.